| Name: | NVIDIA H100 Tensor Core GPU |

| Model: | H100 |

| Brand: | NVIDIA |

| List Price: | $30,180.00 |

| Price: | $30,180.00 |

| Availability: | In Stock at Global Warehouses. |

| Condition: | New |

| Warranty: | 3 Years |

- Pay by wire transfer.

- Pay with your WebMoney.

- Pay with your Visa credit card.

- Pay with your Mastercard credit card.

- Pay with your Discover Card.

- Safe, Fast, 100% Genuine. Your Reliable IT Partner.

- Best Price Assurance, Bulk Savings, Trusted Worldwide.

The NVIDIA H100 Tensor Core GPU is designed to power the most demanding AI, data analytics, and high-performance computing (HPC) workloads. Based on the NVIDIA Hopper architecture, it delivers next-level performance for training and inference of large AI models, helping researchers and enterprises get more computation out of every GPU.

Featuring up to 80 GB of HBM3 memory and 3.35 TB/s memory bandwidth, it’s engineered to accelerate large language models (LLMs), transformer-based networks, deep learning frameworks, and data-intensive HPC simulations. It supports full-stack AI workflows with compatibility across the NVIDIA AI Enterprise ecosystem.

From data centers and cloud infrastructures to research labs and hyperscale AI deployments, this GPU empowers teams to build, scale, and deploy AI models faster while reducing overall operational costs.

Key Features

- Built on the powerful NVIDIA Hopper GPU architecture

- Up to 80 GB of ultra-fast HBM3 memory

- 3.35 terabytes per second (TB/s) of memory bandwidth

- Enhanced support for the Transformer Engine and mixed-precision AI compute

- Fully compatible with the NVIDIA AI Enterprise software stack, CUDA, Triton, and TensorRT
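To put the memory figures above in context, here is a minimal sizing sketch in Python. It assumes the 80 GB and 3.35 TB/s values from the feature list, decimal units, and weights-only storage (no activations, KV cache, or optimizer state). The function names and the example model sizes are hypothetical and not part of any NVIDIA tool.

```python
# Rough sizing arithmetic based on the feature list above.
# Assumptions (not from the listing): decimal GB/TB, weights only,
# and the hypothetical helper names below.

HBM_CAPACITY_GB = 80        # "up to 80 GB" of HBM3
HBM_BANDWIDTH_TBPS = 3.35   # memory bandwidth in TB/s

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "fp8": 1}


def weight_footprint_gb(num_params: float, dtype: str) -> float:
    """Memory needed to hold the model weights alone, in decimal GB."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits_on_one_gpu(num_params: float, dtype: str,
                    capacity_gb: float = HBM_CAPACITY_GB) -> bool:
    """True if the weights alone fit in the stated HBM capacity."""
    return weight_footprint_gb(num_params, dtype) <= capacity_gb


def min_weight_read_ms(num_params: float, dtype: str,
                       bandwidth_tbps: float = HBM_BANDWIDTH_TBPS) -> float:
    """Lower bound, in ms, on streaming every weight from HBM once."""
    total_bytes = num_params * BYTES_PER_PARAM[dtype]
    return total_bytes / (bandwidth_tbps * 1e12) * 1e3


if __name__ == "__main__":
    for params in (7e9, 70e9):  # hypothetical model sizes
        for dtype in ("fp16", "fp8"):
            print(f"{params:.0e} params, {dtype}: "
                  f"{weight_footprint_gb(params, dtype):.0f} GB, "
                  f"fits={fits_on_one_gpu(params, dtype)}, "
                  f"min read={min_weight_read_ms(params, dtype):.2f} ms")
```

Real deployments need headroom beyond the weights, so treat a result close to the capacity limit as not fitting in practice.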

Target Applications

- Training and deployment of large-scale AI models including GPT, BERT, and LLaMA

- Generative AI model development and inference optimization

- Scientific computing, financial modeling, and advanced simulations

- Enterprise-grade cloud AI infrastructure and data center acceleration

Why Choose This GPU for AI and HPC?

This GPU is built for the future of AI. With advanced architectural features, massive bandwidth, and groundbreaking AI performance, it enables businesses and researchers to push the boundaries of what's possible in machine learning and computational science.

Whether you're scaling AI workloads across clusters or optimizing deep learning pipelines, the H100 offers the speed, scalability, and reliability that large-scale deployments demand, making it a premier choice for AI-driven innovation.

| Brand | NVIDIA |

| Model | H100 Tensor Core GPU |

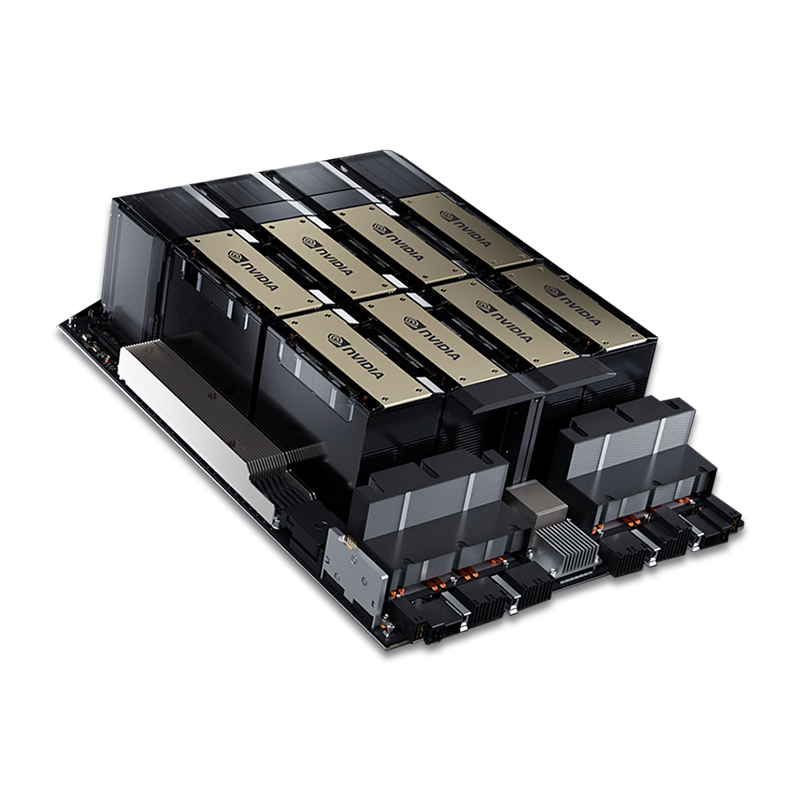

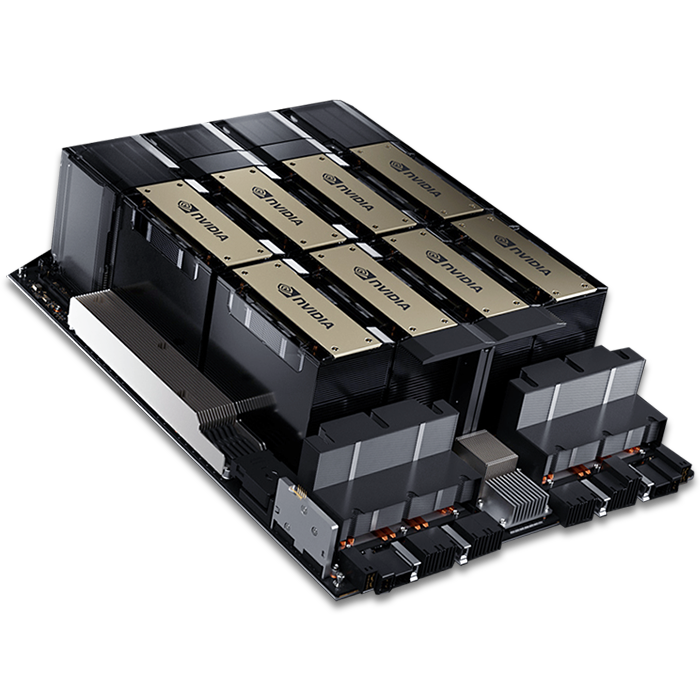

| Form Factor | SXM (H100 SXM), PCIe Dual-Slot Air-Cooled (H100 NVL) |

| Architecture | NVIDIA Hopper |

| FP64 | 34 teraFLOPS (SXM), 30 teraFLOPS (NVL) |

| FP64 Tensor Core | 67 teraFLOPS (SXM), 60 teraFLOPS (NVL) |

| FP32 | 67 teraFLOPS (SXM), 60 teraFLOPS (NVL) |

| TF32 Tensor Core* | 989 teraFLOPS (SXM), 835 teraFLOPS (NVL) |

| BFLOAT16 Tensor Core* | 1,979 teraFLOPS (SXM), 1,671 teraFLOPS (NVL) |

| FP16 Tensor Core* | 1,979 teraFLOPS (SXM), 1,671 teraFLOPS (NVL) |

| FP8 Tensor Core* | 3,958 teraFLOPS (SXM), 3,341 teraFLOPS (NVL) |

| INT8 Tensor Core* | 3,958 TOPS (SXM), 3,341 TOPS (NVL) |

| GPU Memory | 80 GB (SXM), 94 GB (NVL) |

| Memory Bandwidth | 3.35 TB/s (SXM), 3.9 TB/s (NVL) |

| Decoders | 7 NVDEC + 7 JPEG (both variants) |

| Max Thermal Design Power (TDP) | Up to 700W (SXM), 350–400W (NVL) |

| Multi-Instance GPU (MIG) | Up to 7 MIGs @10GB (SXM), Up to 7 MIGs @12GB (NVL) |

| Interconnect | SXM: NVIDIA NVLink 900 GB/s, PCIe Gen5 128 GB/s; NVL: NVIDIA NVLink 600 GB/s, PCIe Gen5 128 GB/s |

| Server Platforms | SXM: NVIDIA HGX H100, NVIDIA DGX H100 & Certified Systems (4–8 GPUs); NVL: Certified Systems (1–8 GPUs) |

| Software Support | NVIDIA AI Enterprise: Add-on (SXM), Included (NVL) |

| Warranty | 3 Years |
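One way to read the compute and bandwidth rows together is a simple roofline estimate: dividing peak throughput by memory bandwidth gives the arithmetic intensity (FLOPs per byte) above which a kernel stops being limited by memory. The sketch below is illustrative only. It uses the FP64 and FP32 rows, which carry no asterisk in the table, and the function and dictionary names are assumptions introduced here.

```python
# Roofline-style arithmetic from the specification table above.
# Only unstarred rows are used; all names here are hypothetical.

SPECS = {
    "SXM": {"fp64_tflops": 34, "fp32_tflops": 67, "bandwidth_tbps": 3.35},
    "NVL": {"fp64_tflops": 30, "fp32_tflops": 60, "bandwidth_tbps": 3.9},
}


def ridge_point(peak_tflops: float, bandwidth_tbps: float) -> float:
    """FLOPs per byte at which a kernel shifts from memory-bound to compute-bound."""
    return peak_tflops / bandwidth_tbps


def attainable_tflops(intensity: float, peak_tflops: float,
                      bandwidth_tbps: float) -> float:
    """Roofline bound: the lesser of peak compute and bandwidth times intensity."""
    return min(peak_tflops, intensity * bandwidth_tbps)


if __name__ == "__main__":
    for variant, s in SPECS.items():
        for key in ("fp64_tflops", "fp32_tflops"):
            rp = ridge_point(s[key], s["bandwidth_tbps"])
            print(f"{variant} {key}: ridge point ~ {rp:.1f} FLOPs/byte")
```

These are upper bounds from datasheet peaks. Measured throughput depends on the kernel, clocks, and power limits, so the output is a planning aid, not a benchmark.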