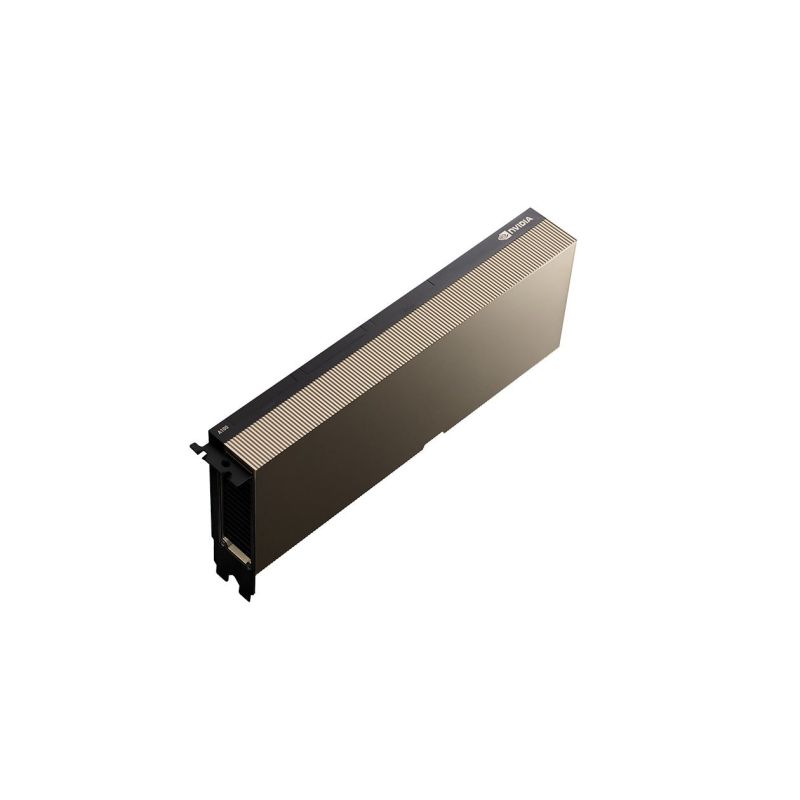

| Field | Value |
| --- | --- |
| Name: | NVIDIA A100 80GB Tensor Core GPU |
| Model: | A100 |
| Brand: | NVIDIA |
| List Price: | $22,527.00 |
| Price: | $22,527.00 |
| Availability: | In stock at global warehouses. |
| Condition: | New |
| Warranty: | 3 Years |

Shipping:

6–10 days, depending on delivery address and PIN code

Payment:

- Pay by wire transfer.

- Pay with your WebMoney.

- Pay with your Visa credit card.

- Pay with your Mastercard credit card.

- Pay with your Discover Card.

Support:

- TZS, XAF payment.

- Safe, Fast, 100% Genuine. Your Reliable IT Partner.

- Best Price Assurance, Bulk Savings, Trusted Worldwide.

The NVIDIA A100 Tensor Core GPU is a data center GPU designed to accelerate AI, data analytics, and high-performance computing (HPC) workloads. Built on the NVIDIA Ampere architecture, it scales from one large job using the full 80 GB of memory down to as many as seven isolated Multi-Instance GPU (MIG) partitions on a single card.

Key Features

- 80 GB HBM2e Memory: Ultra-fast memory with up to 2 TB/s bandwidth to support massive models and datasets.

- Multi-Instance GPU (MIG): Partitions a single A100 into up to seven isolated instances of 10 GB each, so multiple networks can run simultaneously with high resource utilization.

- Tensor Core Acceleration: Supports multiple precisions (FP64, TF32, BFLOAT16, FP16, INT8), delivering up to 19.5 TFLOPS FP64 and 312 TFLOPS FP16 Tensor Core performance (624 TFLOPS FP16 with sparsity).

- PCIe and SXM Form Factors: Offers flexibility for diverse deployment environments, with a maximum power draw of 300W (PCIe) or 400W (SXM).
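As a rough guide to what "massive models" means for an 80 GB card, the sketch below estimates the memory taken by model weights alone at the precisions listed above. It is an illustration under stated assumptions, not a sizing tool: the function names and the example parameter counts are hypothetical, and the estimate ignores activations, KV cache, optimizer state, and framework overhead, all of which add to real usage.

```python
# Weights-only estimate. Real workloads also need memory for activations,
# KV cache, optimizer state, and framework overhead (not modeled here).
GPU_MEMORY_GB = 80  # from the listing: 80 GB HBM2e

# Storage width per parameter. TF32 is a compute format; its operands are
# held in memory as 32-bit values, hence 4 bytes.
BYTES_PER_PARAM = {"FP64": 8, "TF32": 4, "FP16": 2, "INT8": 1}


def weights_gb(num_params: float, precision: str) -> float:
    """Return the size of the raw weights in decimal gigabytes."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def fits_on_gpu(num_params: float, precision: str,
                memory_gb: float = GPU_MEMORY_GB) -> bool:
    """True if the weights alone fit in the given amount of GPU memory."""
    return weights_gb(num_params, precision) <= memory_gb
```

For a hypothetical 30-billion-parameter model, `weights_gb(30e9, "FP16")` gives 60 GB, which fits on one card. A 70-billion-parameter model needs 140 GB for FP16 weights, which does not fit, while the same weights in INT8 take 70 GB.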

Target Applications

- Training and inference of large AI/ML models, including LLMs and GANs

- Data science workloads and analytics at scale

- High-performance computing simulations and scientific workloads

- Cloud-based GPU compute services and virtualized environments

Why Choose This GPU?

The A100 is a flagship GPU solution that accelerates diverse workloads, from AI model training to data analytics. With features like MIG, high memory bandwidth, and compatibility with the NVIDIA software ecosystem (CUDA, TensorRT, RAPIDS), the A100 helps enterprises scale faster, process data more efficiently, and lower total cost of ownership.

| Specification | Value |
| --- | --- |
| Brand | NVIDIA |
| Model | A100 Tensor Core GPU |
| GPU Architecture | NVIDIA Ampere |
| FP64 Performance | 9.7 TFLOPS |
| FP64 Tensor Core Performance | 19.5 TFLOPS |
| FP32 Performance | 19.5 TFLOPS |
| Tensor Float 32 (TF32) | 156 TFLOPS (312 TFLOPS with sparsity) |
| BFLOAT16 Tensor Core | 312 TFLOPS (624 TFLOPS with sparsity) |
| FP16 Tensor Core | 312 TFLOPS (624 TFLOPS with sparsity) |
| INT8 Tensor Core | 624 TOPS (1,248 TOPS with sparsity) |
| GPU Memory | 80 GB HBM2e |
| Memory Bandwidth | 1,935 GB/s (PCIe); 2,039 GB/s (SXM) |
| Multi-Instance GPU (MIG) | Up to 7 MIG instances @ 10 GB |
| Form Factor | PCIe (dual-slot air-cooled or single-slot liquid-cooled); SXM |
| Max Power Consumption (TDP) | 300W (PCIe); 400W (SXM) |
| Interconnect Bandwidth | PCIe Gen4: 64 GB/s; NVLink: 600 GB/s |
| Server Options | PCIe: 1-8 GPUs in Partner or NVIDIA-Certified Systems; SXM: 4, 8, or 16 GPUs in NVIDIA HGX A100; NVIDIA DGX A100 with 8 GPUs |
| Compute APIs | CUDA, NVIDIA TensorRT™, cuDNN, NCCL |
| vGPU Software Support | NVIDIA AI Enterprise, NVIDIA Virtual Compute Server (vCS), NVIDIA RTX Virtual Workstation (vWS) |
| Warranty | 3 Years |
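The peak compute and memory bandwidth figures in the specification table can be combined into two back-of-envelope numbers that help when comparing the PCIe and SXM variants. The sketch below uses the standard roofline ratio (peak FLOPS divided by memory bandwidth). The function names are hypothetical, and the inputs are theoretical peaks without sparsity, so measured results will be lower.

```python
# Peak figures copied from the specification table (without sparsity).
PEAK_TFLOPS = {"FP64 Tensor Core": 19.5, "TF32": 156.0, "FP16 Tensor Core": 312.0}
BANDWIDTH_GBPS = {"PCIe": 1935.0, "SXM": 2039.0}
GPU_MEMORY_GB = 80.0


def ridge_point(peak_tflops: float, bandwidth_gbps: float) -> float:
    """FLOPs per byte above which a kernel is compute-bound rather than
    memory-bound, according to the roofline model."""
    return (peak_tflops * 1e12) / (bandwidth_gbps * 1e9)


def full_memory_sweep_ms(memory_gb: float, bandwidth_gbps: float) -> float:
    """Milliseconds needed to read all of GPU memory once at peak bandwidth."""
    return memory_gb / bandwidth_gbps * 1e3
```

With the SXM figures, `ridge_point(312.0, 2039.0)` comes to roughly 153 FLOPs per byte, so an FP16 Tensor Core kernel that does less work per byte moved is limited by memory bandwidth rather than compute. Reading the full 80 GB once at 2,039 GB/s takes roughly 39 ms.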